Evidence for Learning tested QuickSmart Numeracy (‘QuickSmart’), an intensive supplemental maths program that aims to improve maths achievement by increasing students’ automaticity and fluency in basic maths operations (addition, subtraction, multiplication and division). The program is designed to help students recall basic maths facts quickly, freeing up cognitive capacity (or ‘working memory’) for higher-level thinking and learning.

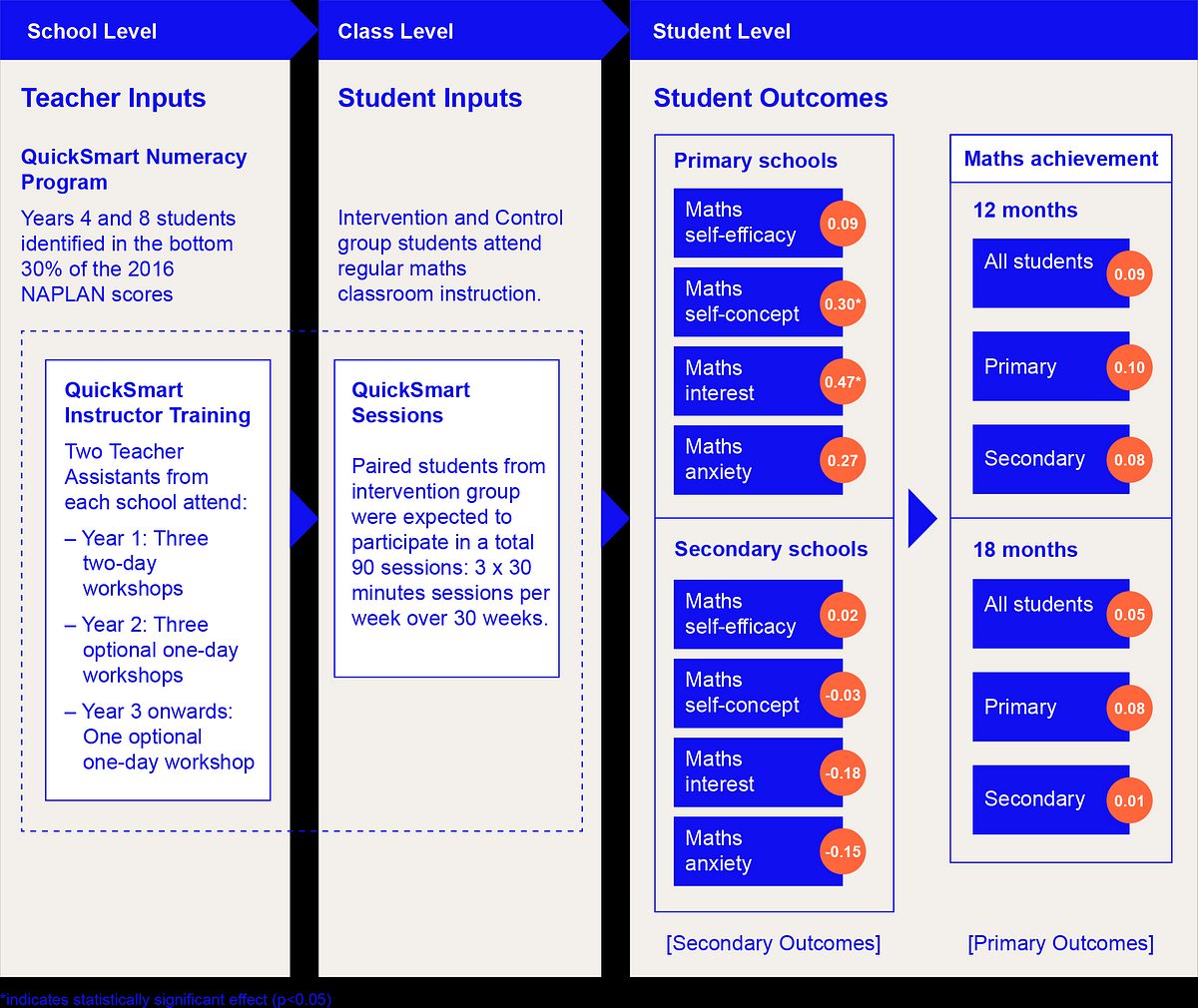

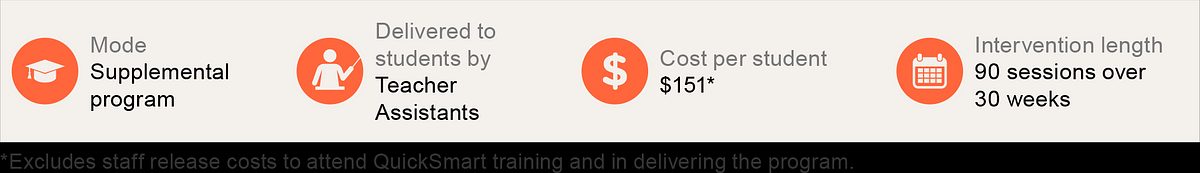

QuickSmart is designed as a 90-session intervention delivered over 30 weeks (3 x 30-minute sessions per week), typically by Teaching Assistants (TAs) trained as QuickSmart Instructors. Sessions combine teacher-led discussion and questioning on number facts with recall strategies and activities (such as flash cards and speed sheets) to practise simple number facts and operations, before moving on to problem-solving strategies and activities.

This evaluation was set up as an ‘effectiveness’ trial to assess whether QuickSmart has an additional impact on maths outcomes, compared with a control group receiving only regular maths instruction, when delivered under everyday conditions in 70 Primary and Secondary classrooms across 23 schools. Delivery of the intervention was led by SiMERR at the University of New England and targeted Year 4 and Year 8 students from Sydney Catholic Schools in New South Wales.

QuickSmart, as experienced in this trial, did not have an additional impact on maths achievement. Overall, and for Primary students, there was on average one month’s additional gain in maths; however, this trial was not commissioned to detect this level of difference, so the difference was not statistically significant and should be treated with caution. Students who attended more QuickSmart sessions appear to have made higher gains, but we cannot draw this conclusion with confidence because the number of such students was too low. There was, however, strong evidence that QuickSmart improved Primary students’ maths interest and maths confidence, although this did not extend to self-efficacy. No statistically significant difference was detected in Secondary students’ cognitive and affective outcomes. The results of this trial have a high security rating.

Schools in this trial had difficulty delivering 90% (81 sessions) or more of the 90-session program. Whilst most schools delivered at least 50% (45 sessions) of the full program, only 12% of Primary students and no Secondary students received the recommended 90% or more. The process evaluation identified difficulties in timetabling QuickSmart sessions and coordinating transitions in and out of them, as well as concerns from teachers and students about missing class time and activities while attending QuickSmart, although these issues were more easily managed in Primary schools. Recruitment challenges and a delayed start to the intervention may also have affected some schools’ ability to deliver 90 sessions in 30 weeks within one school year.
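The dosage thresholds quoted in this report are simple proportions of the prescribed 90 sessions (3 sessions per week over 30 weeks). The Python sketch below illustrates that conversion; the function and constant names are hypothetical and are not part of QuickSmart, its OZCAAS software or the evaluation’s analysis code.

```python
# Hypothetical helpers illustrating the session-dosage arithmetic.
# Not taken from QuickSmart or from the evaluation's analysis.
PRESCRIBED_SESSIONS = 3 * 30  # 3 sessions per week over 30 weeks = 90


def sessions_for_share(share: float) -> int:
    """Nearest whole number of sessions for a given share of the full program."""
    return round(share * PRESCRIBED_SESSIONS)


def share_of_program(sessions: int) -> float:
    """Fraction of the prescribed sessions that a student attended."""
    return sessions / PRESCRIBED_SESSIONS


if __name__ == "__main__":
    for share in (0.90, 0.50):
        print(f"{share:.0%} of the program is about {sessions_for_share(share)} sessions")
```

Under these assumptions, the recommended 90% threshold corresponds to 81 sessions and the 50% level to 45 sessions, matching the figures above.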

The findings from this trial do not mean that the underlying concept of maths automaticity does not improve the maths outcomes of students who are struggling in maths. Research suggests that maths automaticity is an important skill; however, students need to be able to link it to, and apply it effectively in, maths concepts and problems. Because too few students received the full program of 90 sessions in this trial, we cannot conclude whether the program would work when delivered as prescribed. Further evaluation is needed to generate more evidence in this area.

Subject area: Numeracy

The logic model below reflects the key elements evaluated. It shows the anticipated changes in students’ self-concept, interest, anxiety and self-efficacy in maths that are expected to lead to changes in overall maths achievement, as measured by ACER’s Progressive Achievement Test – Mathematics (PAT-M). Baseline measures were taken in March 2017, and an 18-month delayed post-test was undertaken in May 2018, six months after the intervention period ended in December 2017. The analysis also assessed the effects of QuickSmart using immediate follow-up PAT-M results at 12 months (Term 4, 2017). Interviews with maths teachers and QuickSmart Instructors from a sub-sample of schools provided a deeper understanding of how the QuickSmart program was implemented in their schools.

* Refer to Appendix A for the table used to translate effect sizes into estimated months of progress.

** Refer to Appendix B for E4L’s independent assessment of the security rating.

*** When staffing costs are included, the cost rating for QuickSmart is Moderate.

This project involved 23 schools (12 Primary and 11 Secondary) from the Diocese of Sydney. The ICSEA¹ values of most schools were marginally above the national average of 1,000, with a mean of 1,054 for Primary schools and 1,028 for Secondary schools. Participating students were identified as being in the bottom 30% of their national cohort in mathematics based on 2016 NAPLAN scores.

QuickSmart was developed by the SiMERR National Research Centre at the University of New England. Schools and systems interested in the program should consider piloting QuickSmart at a smaller scale and planning a longer delivery period to ensure there is sufficient time to deliver the program. This will allow schools to determine whether it is feasible to implement the program as prescribed (90% or more, or 81 of 90 sessions), and to use that evidence to decide whether to implement it more widely.

QuickSmart costs $10,500 (excl. GST) per school in the first year, which includes training, access to online resources and telephone support, equipment and resources, and a three-year licence to the OZCAAS program required for delivery and assessment. The cost per student is estimated at $151 per year, based on 25 students per year undertaking the intervention over three years. When the cost of TA cover during QuickSmart training is included, the estimated cost is $1,007 per student. This evaluation was supported by Sydney Catholic Schools, and the 23 participating schools were allocated funding of $8,600 to support implementation of the QuickSmart program and were not required to pay the standard start-up fees.

Key conclusions

- In this trial, QuickSmart did not have an additional impact on maths achievement compared with regular classroom instruction and support. There was a small positive gain, equivalent to one month’s additional learning; however, this trial was not commissioned to detect this level of difference², so the difference was not statistically significant.

- When models were adjusted for intervention exposure, the estimated effect on student achievement increased slightly (indicating that exposure levels have some effect on outcomes); however, this effect was not statistically significant.

- Sub-group analysis displayed a small but not statistically significant positive effect for Primary students. The gain was equivalent to one month’s additional learning. There was no additional effect for Secondary students.

- Schools faced challenges achieving the prescribed program exposure of 90 sessions within 30 weeks. Primary students, on average, received 73% (or 66 sessions) of QuickSmart’s prescribed 90 sessions over 30 weeks, while Secondary students received 49% (or 44 sessions). Only 35% of Primary students and 4% of Secondary students received more than 75% (or 67 sessions) of the prescribed QuickSmart sessions.

- Sound implementation of QuickSmart appeared more feasible in Primary schools than in Secondary schools. Both settings struggled with transitions into and out of the classroom, and in both settings concern was expressed about the classroom content students missed while attending QuickSmart.

- Primary teachers were positive about QuickSmart and reported that it appeared to help students gain more confidence participating in their maths classrooms. QuickSmart had a statistically significant positive impact on Primary students’ maths self-concept (effect size g = 0.30) and interest in maths (effect size g = 0.47); however, there was no evidence of impact on self-efficacy (effect size g = 0.09). There were no statistically significant intervention effects on Secondary students’ cognitive and affective outcomes.

Evidence for Learning has provided its own plain English commentary on implications based on the evaluation findings and considerations for schools and systems.

QuickSmart E4L Commentary

SiMERR and Sydney Catholic Schools have published their own statements in response to the Evaluation Report. For transparency, we provide links to their statements below.

SiMERR’s statement is here and Sydney Catholic Schools’ statement is here.

These statements do not form part of Evidence for Learning’s reporting for this Project.

The following ‘practitioner-friendly’ reports are free to access and download.

QuickSmart Evaluation Report

QuickSmart Executive Summary

QuickSmart Evaluation Protocol

QuickSmart Statistical Analysis Plan

This evaluation report and supporting materials are licensed under a Creative Commons licence as outlined below. Permission may be granted for derivatives; please contact Evidence for Learning for more information.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Licence.

1. The Index of Community Socio-Educational Advantage (ICSEA) provides an indication of the socio-educational backgrounds of students and is set at an average of 1000. The lower the ICSEA value, the lower the level of educational advantage of students who go to this school.

2. This trial was powered to achieve a Minimum Detectable Effect Size (MDES) of 0.24 at randomisation, which meets the high padlock rating criterion of an MDES below 0.3.