Back in March 2015, I described some key ideas and resources about evidence in education I encountered at the International Congress for School Effectiveness and Improvement (ICSEI) conference in Cincinnati, Ohio. I briefly summarised a keynote address by Dr Vivian Tseng of the William T. Grant Foundation and a presentation on Ontario’s Knowledge Network for Applied Educational Research (KNAER). In many ways, that conference was my introduction to the study of research use in education, which has informed our thinking as we’ve shaped Evidence for Learning (E4L).

Building on that work, this February I was privileged to be invited to present E4L’s work at the William T. Grant Foundation’s annual Use of Research Evidence meeting in Washington, D.C. (I also had a few other meetings while I was in North America, including with the KNAER team.) The meeting brought together more than 100 researchers, policy-makers and grant-makers with three goals:

- Advance the field’s efforts to build theory, methodological tools, and empirical evidence on ways to improve the use of research evidence

- Support a growing network and field of inquiry related to studying the use of research evidence

- Share lessons learned from this meeting with the broader field to improve the use of research evidence

It’s in the spirit of that third goal that I’d like to share a few things I learned over my week in North America and some initial thoughts about how they apply to our work at E4L. I hope these insights will be useful to Australian educators and system leaders as they think about using research evidence to improve policy and practice.

A space between research and practice: brokerage

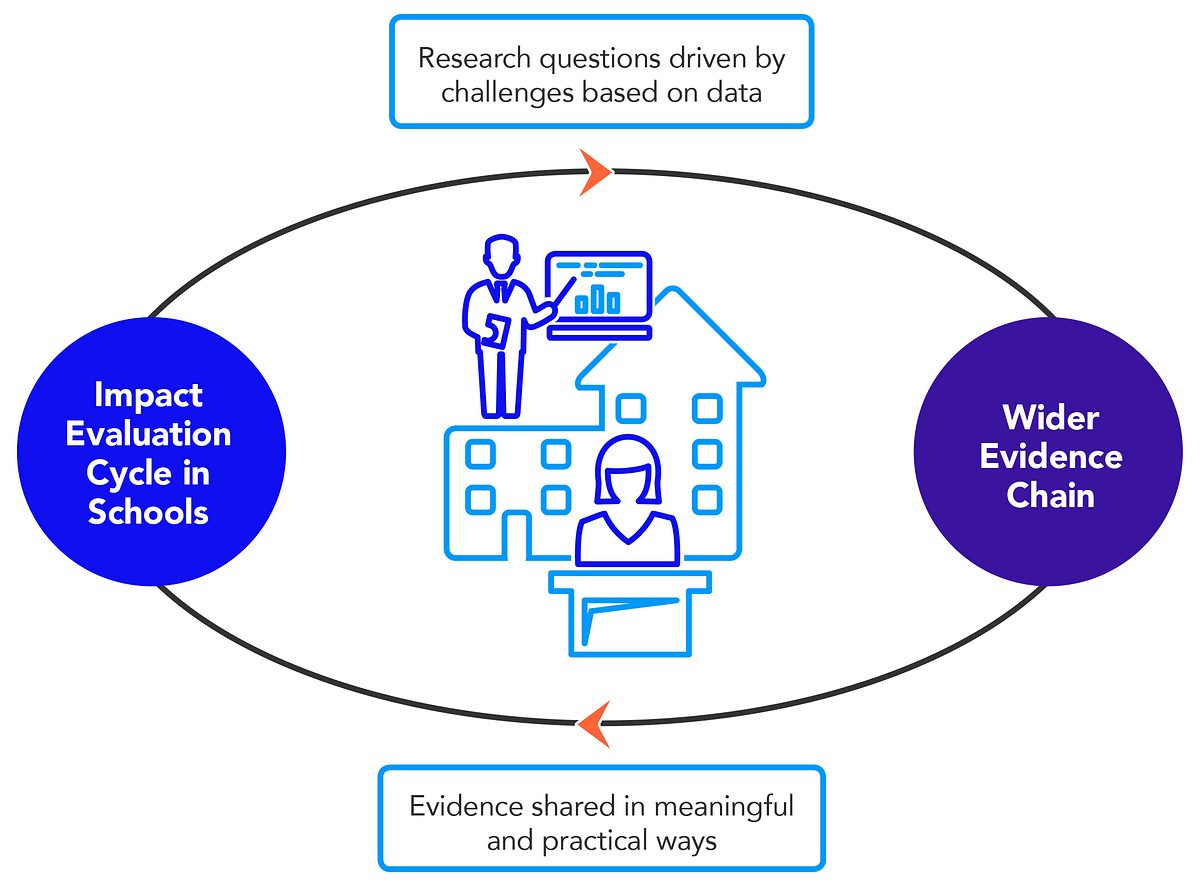

First, the meeting crystallised a schema I’ve been developing about the connection between academic research and research use in schools. At E4L, we think of ourselves as trying to strengthen the evidence ecosystem for education. Our graphic showing the evidence ecosystem (above) has a circle on the left for activity in schools and a circle for the wider evidence chain on the right. These two circles are connected by two arrows: one showing research questions being driven by practical challenges and data in schools, and the other showing evidence from research being shared in meaningful and practical ways.

I now recognise these arrows as marking out a third space between research and practice: brokerage. The William T. Grant meeting was largely devoted to sharing emerging insights about this space, and my other meetings gave me further insights.

What does the brokerage space look like?

One thing that became clear at the William T. Grant Foundation meeting is that there is, as yet, no agreed definition of brokering, even among the academics studying the field of research use. I’m beginning to think about this brokerage space as a decentralised network of activity. The brokers in this network include many organisations and people who, consciously or unconsciously, share research findings that ultimately find their way to practitioners. Some of these brokers are much closer to practice than others. Indeed, some may be practitioners themselves. Our Education Endowment Foundation (EEF) colleague Jonathan Sharples has noted that these practitioner brokers are particularly effective in helping schools change practice based on evidence, as they have practical insights that other brokers can’t offer and are easier for other educators to trust. Other brokers may work in advocacy organisations, education systems or the media.

Broadly, I see three roles that organisations and people in the brokerage space play:

- creating resources that distil and communicate evidence from research;

- convening partnerships between researchers and practitioners; and

- supporting practitioners to engage with evidence and test its impact locally.

On my trip, I learned of many examples of each type of role:

Resource Creators

Examples of Resource Creators include the EEF, with its Toolkit, individual project evaluation reports and guidance reports; the US Institute of Education Sciences’ What Works Clearinghouse, which includes individual project evaluation reports and practice guides; and Evidence for ESSA, a new resource from Bob Slavin’s team at Johns Hopkins University that summarises the evidence on individual education programs available in the US.

Partnership Convenors

Examples of Partnership Convenors include the William T. Grant Foundation, which funds Research-Practice Partnerships and shares learning about how to make them more effective; the Carnegie Foundation for the Advancement of Teaching, which convenes Networked Improvement Communities that work on specific, shared problems of practice across schools; and Ontario’s KNAER, now embarking on Phase 2, which convenes knowledge networks among practitioners and researchers aligned to four province-wide reform priorities and shares emerging insights about how to mobilise knowledge.

Practice Supporters

Practice Supporters include the EEF’s emerging work of funding Research Schools, which, as my colleague Tanya Vaughan has recently described, are schools that take on responsibility for supporting other schools to use research evidence to improve outcomes for their students. Results for America’s Evidence in Education Lab is beginning work like this with six school districts across several US states, too.

What insights are emerging about effective brokering?

Two presentations from the William T. Grant meeting gave insights that I think would be valuable to share.

How findings move, or don’t: ‘Dead-ends’, ‘echo chambers’, and ‘funnels’

Jenna Neal, from Michigan State University, shared findings from the Michigan School Program Information Project (MiSPI). This study looked at ways in which senior leaders in Michigan school districts got information about research on programs they were considering for their district. Neal and her colleagues found that in some cases information from researchers was not getting into districts because there were ‘dead-ends’ – people who were looked to as good sources of information who were not aware of research evidence; or there were ‘echo chambers’ – in which district leaders shared information about what was happening within their district but weren’t aware of knowledge from outside. Interestingly, in the cases where district leaders did get information, there were often ‘funnels’ – people who were already in high-leverage positions in their networks and had access to research evidence, and who thus linked research evidence to many other people, both directly and through others. For E4L, learning about this highlighted the importance of working with existing networks and getting high-quality, accessible evidence to people who are already in high-leverage positions.

Absorptive capacity

Even once evidence gets to schools, ensuring it has an impact on practice remains a challenge. Caitlin Farrell and Cynthia Coburn, co-investigators at the National Center for Research in Policy and Practice in the US, shared their work studying the absorptive capacity of one US school district. They defined absorptive capacity as ‘the ability to recognize the value of new information, assimilate it, and apply it in novel ways as part of organizational routines, policies, and practices’ (2016). They identified four features within a district that were good indicators of its ability to partner effectively with organisations bringing in external knowledge: ‘prior knowledge, communication pathways, strategic knowledge leadership, and resources to partner’. I imagine these features, and the idea of absorptive capacity, would be useful for schools and networks thinking about whether they’re ready to engage with a specific piece of educational research. Their full paper on these concepts, ‘Absorptive capacity: A conceptual framework for understanding district central office learning’, was published in the Journal of Educational Change in November 2016.

A bunch of interesting ideas, but so what?

So, what does all this mean for us, back here in Australia?

The trip has also helped me clarify E4L’s role in trying to strengthen the evidence ecosystem for education. To date, our role has been closer to the evidence base than to schools, but that is changing as our work extends beyond the Teaching & Learning Toolkit and the Learning Impact Fund to produce more detailed practice guides and other tools for use in schools. This is why it will be very important for us to engage with teachers and school leaders in the coming months. We’ll need to make sure we glean practical insights from schools about how to bring research insights to life to benefit students.

In that regard, I’m heartened by the recent engagement on social media and via podcast from Deb Netolicky, Dan Haesler and others. As Deb wrote in her response to my response to her, it’s useful to engage in debate and discussion: to ‘hug your haters’, so to speak (though I’m not sure whether I’m supposed to be He-Man or Skeletor!).

I ended my post about ICSEI in 2015 by writing that the ‘Toolkit, the work of both the KNAER and the William T. Grant Foundation, and the ICSEI conference are all indicative of the larger cultural shift in education toward evidence-based practice. It’s a transition that happened in medicine about a century ago, with astounding results for the health and longevity of people around the world. I have no doubt that the results in education will be equally far-reaching, and I’m glad I can play at least a small role in that transformation.’

I still believe in the far-reaching benefits of teaching and learning being better informed by evidence, and I’m still happy to play a small role.

John Bush is the Associate Director of Education at Social Ventures Australia and part of the leadership team of Evidence for Learning. In this role, he manages the Learning Impact Fund, a new fund building rigorous evidence about Australian educational programs.
